The validation step is a procedure to verify whether the simulation model is adequate for its purpose [1]. In this step, the simulation results may be validated by comparing them with results from the real application (if available) or from another simulation.

We assume that if each algorithm can be validated separately, the resulting simulation (with all algorithms together) may be considered accurate enough for the planned study.

Example: In our initial studies of the impact of the network on cooperative tasks, the network aspects of our simulation were considered valid because we use the standard OMNeT++ model, which has been used in several works cited on the OMNeT++ homepage. However, the validation process was necessary for both topology control and cooperative control, comparing an ideal network in OMNeT++ with a previous Matlab simulation (which had no network implementation). This was necessary because both were re-implemented from scratch in OMNeT++.

There are more than 100 verification, validation and testing (VV&T) techniques for modelling and simulation (M&S) [2], and it is not feasible to use them all in each simulation study. Moreover, if we look at Agent-Based Modelling and Simulation (ABMS), which is the closest existing application to cooperative robotics, we notice several particularities that should also be considered. For example, we should consider the complexities arising from the non-linear nature of the process, from the lack of data, which may affect the statistical validation, and from the split of the system into high and low levels.

We may classify the VV&T techniques into four categories:

➤ Informal: techniques based on human inspection and reasoning, such as the face validation described below.

➤ Formal: techniques that rely on mathematical proofs.

➤ Statistical: techniques that verify precision using statistical models.

➤ Dynamic: techniques that analyse computational behavior and may rely on extra instrumentation or implementation to collect data.

During this process, we want to avoid three general errors of VV&T [3][4]:

⧳ Type I Error: when we reject a valid model (simulation);

⧳ Type II Error: when we accept an invalid model (simulation);

⧳ Type III Error: when we accept a valid model, but the model is irrelevant for the study objective. This mainly happens when the documentation phase was not done correctly.

4 Step Validation Process

We decided to use the four-step validation for ABMS proposed in [1], consisting of one informal technique, two dynamic techniques and one statistical technique. We merge this procedure with the statistical validation for simulations from [5] by using confidence interval techniques in the statistical step. The four steps are:

📘 Face Validation: using all the documents produced so far and the first simulation results, we make a visual check of the results, looking for any easily observable inconsistencies. It can be further divided into:

➤ Animation Assessment: observing graphs, animations, diagrams or any other visual aspect of the simulation behavior, looking for any inconsistency;

➤ Output Assessment: checking the plausibility of the simulation output;

➤ Immersive Assessment: looking more deeply into the simulation behavior, examining robot paths, variables or other information that can indicate whether the simulation is running correctly.

📗 Sensitivity Analysis: in our case, we understand this step as changing parameters to verify whether both simulations show similar changes in the results;

📕 Calibration: the third step is to make small adjustments to the simulation. We can also use this step to calibrate a "standard" desirable operating point; in other words, to adjust parameter values to the most conservative values that still represent real system use. For example, we can choose a smaller velocity limit for the robots or adjust a robot's communication range.

📙 Statistical Validation: the last step is to apply a statistical validation technique to analyse the simulation precision. This step is further detailed in the following topic.
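The sensitivity analysis step can be illustrated with a small sketch. It is only one possible reading of "similar changes in the results": the same parameter change is applied to both simulators and the relative changes of the output are compared. The function names, the 10% threshold `max_gap` and all numbers are hypothetical and do not come from the study.

```python
def relative_change(baseline, perturbed):
    """Relative change of an output when a parameter is perturbed."""
    if baseline == 0:
        raise ValueError("baseline output must be non-zero")
    return (perturbed - baseline) / baseline


def similar_sensitivity(ref_pair, test_pair, max_gap=0.10):
    """Compare how two simulators react to the same parameter change.

    ref_pair, test_pair : (baseline_output, perturbed_output) for the
                          reference simulator and the simulator under test.
    max_gap             : hypothetical threshold on the difference between
                          the two relative changes.
    """
    ref_change = relative_change(*ref_pair)
    test_change = relative_change(*test_pair)
    return abs(ref_change - test_change) <= max_gap, ref_change, test_change


# Invented outputs before and after the same parameter change.
ok, ref_change, test_change = similar_sensitivity((20.0, 25.0), (21.0, 27.3))
print(ok, round(ref_change, 3), round(test_change, 3))
```

A different criterion (for example, comparing only the direction of the change) may be more appropriate depending on the evaluation parameter.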

We adopt the confidence interval (CI) statistical validation presented in [5], which the author applies to simulations in general. Here, we make only minor adaptations for cooperative robotics analysis.

The author suggests building one of the two CIs presented in the book. Here we describe the first, called paired-t, which is used when both simulations have the same number of samples. Please refer to [5] for the case of unequal sample sizes.

Let R1 and R2 be the results (of the evaluation parameter) for the same situation (in most cases, defined by the initial conditions) simulated on two different simulation frameworks, and let Zj = R1 - R2 be the error obtained in experiment j of n total experiments. The mean and variance can be obtained as indicated in (1) and (2), respectively:
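Equations (1) and (2) are not reproduced in this text. Assuming they follow the usual paired-t construction described in [5], with $Z_j$ as defined above, they would read as follows, where (2) is the estimated variance of the mean $\bar{Z}(n)$, that is, the sample variance of the $Z_j$ divided by $n$:

$$
\bar{Z}(n) = \frac{1}{n}\sum_{j=1}^{n} Z_j \tag{1}
$$

$$
\widehat{\mathrm{Var}}\left[\bar{Z}(n)\right] = \frac{\sum_{j=1}^{n}\left[Z_j - \bar{Z}(n)\right]^2}{n\,(n-1)} \tag{2}
$$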

The confidence interval is built using (3) with the parameters calculated above and a constant t. Since we usually have fewer than 30 samples, we use the Student's t distribution: t is the critical point of that distribution, obtained from a t-table [5] (4) as a function of n-1 degrees of freedom and the 1-α/2 critical point for the desired 100*(1-α) percent confidence.
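Equation (3) is not reproduced in this text. In the paired-t approach the interval is usually written as $\bar{Z}(n) \pm t_{n-1,\,1-\alpha/2}\sqrt{\widehat{\mathrm{Var}}[\bar{Z}(n)]}$, where $\bar{Z}(n)$ is the mean of the differences $Z_j$ and $\widehat{\mathrm{Var}}[\bar{Z}(n)]$ is the estimated variance of that mean. A minimal Python sketch under that assumption follows; the function name `paired_t_ci` and the sample data are hypothetical, and the critical value is passed in by the caller after looking it up in a t-table.

```python
import math


def paired_t_ci(r1, r2, t_crit):
    """Paired-t confidence interval for the mean difference between two simulators.

    r1, r2 : results of the same n experiments on each simulator (same order).
    t_crit : critical point t_{n-1, 1-alpha/2}, taken from a t-table.
    """
    if len(r1) != len(r2):
        raise ValueError("paired-t needs the same number of samples on both sides")
    n = len(r1)
    if n < 2:
        raise ValueError("at least two paired experiments are required")

    z = [a - b for a, b in zip(r1, r2)]            # Z_j = R1 - R2
    z_mean = sum(z) / n                            # (1) mean of the differences
    var_mean = sum((zj - z_mean) ** 2 for zj in z) / (n * (n - 1))  # (2) variance of the mean
    half_width = t_crit * math.sqrt(var_mean)      # (3) half-length of the interval
    return z_mean - half_width, z_mean + half_width


# Hypothetical results, invented only to exercise the function.
sim_a = [10.0, 12.0, 11.0, 13.0]
sim_b = [9.0, 12.0, 10.0, 12.0]
low, high = paired_t_ci(sim_a, sim_b, t_crit=3.182)  # t for 3 d.o.f., 95% confidence
print(f"CI = [{low:.4f}, {high:.4f}]")
```

Passing `t_crit` explicitly keeps the sketch free of external dependencies and mirrors the table lookup described in the text.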

Some values of t are presented in (4); the complete table can be found in [5] or online.

The resulting interval can be assessed in two ways: statistical and scale significance.

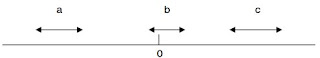

If the confidence interval contains zero, it means it is not statistically significant and both results may be considered approximately the same, or in other words, the simulation under test is validated with the reference (case b). However, if the CI does not include zero, it means that one simulation is affecting the results (case a and case c). The three cases are represent bellow:

We also need to observe the scale of the error, since sometimes the difference is statistically significant but the absolute error is negligible in practice.
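The two assessments can be sketched as a small helper. This is only an illustration: the function name and the `tolerance` parameter (the largest absolute difference still regarded as negligible for the study) are assumptions, and a suitable tolerance has to be chosen per evaluation parameter.

```python
def assess_interval(low, high, tolerance):
    """Classify a confidence interval for the difference between two simulators.

    tolerance is a hypothetical, study-specific bound on the absolute
    difference that is still considered negligible (same unit as the results).
    Returns (statistically_significant, practically_significant).
    """
    if low > high:
        raise ValueError("low must not exceed high")
    # Statistical significance: the interval does not contain zero.
    statistically_significant = not (low <= 0.0 <= high)
    # Scale significance: largest absolute difference compatible with the interval.
    worst_case = max(abs(low), abs(high))
    practically_significant = worst_case > tolerance
    return statistically_significant, practically_significant


# Invented intervals: the first excludes zero but is tiny compared to the tolerance.
print(assess_interval(0.02, 0.08, tolerance=0.5))   # (True, False)
print(assess_interval(-0.4, 0.6, tolerance=0.5))    # (False, True)
```

The first call shows the situation described above: a statistically significant difference whose magnitude is too small to matter.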

_________________________________________________________________________________

Example

Let's say we have a cooperative group of robots doing a decentralized rendezvous task (they should agree on a meeting point and go to it) without any reference point. We have a Matlab simulation, where the algorithm was developed, and we want to validate its OMNeT++ implementation.

We select 10 initial conditions whose communication graph contains at least a spanning tree (there is at least one path from any robot to any other robot).

We also use two distinct topology controls (TCs): a fixed one, where the communication links are those established by the initial conditions and do not change, and a dynamic one, where links are established as robots enter communication range.

No other parameter is changed and each initial condition is exactly the same on both simulators.

After the 20 simulations in both simulators (10 for the fixed and 10 for the dynamic TC), we have the following convergence times (using the same stop criterion):

Using (1), (2) and (3) for a 99% confidence interval:

To calculate t, we have 10 samples and α = 0.01 (1 - 99/100)

So we search the table for 10-1 = 9 degrees of freedom and for 1-α/2 = 0.995

Resulting in t = 3.25

Confidence Intervals:

Fixed: [-0.213, 3.609]

Dynamic: [-0.749, 0.447]
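As a small sanity check on the reported numbers, the sketch below recovers the mean difference, the half-width and the implied standard error from each interval, and tests whether it contains zero. It assumes the intervals are symmetric around the mean difference, as a paired-t interval is, and uses the t value found above; the variable names are illustrative.

```python
T_CRIT = 3.25  # t for 9 degrees of freedom and 99% confidence, as found above

# Confidence intervals reported above, as (lower, upper).
intervals = {
    "fixed": (-0.213, 3.609),
    "dynamic": (-0.749, 0.447),
}

summary = {}
for name, (low, high) in intervals.items():
    mean_diff = (low + high) / 2      # centre of the interval = mean of the Z_j
    half_width = (high - low) / 2     # t * sqrt(variance of the mean)
    std_err = half_width / T_CRIT     # standard error of the mean difference
    contains_zero = low <= 0.0 <= high
    summary[name] = (mean_diff, half_width, std_err, contains_zero)
    print(f"{name}: mean={mean_diff:.3f} half-width={half_width:.3f} "
          f"std-err={std_err:.3f} contains zero: {contains_zero}")
```

Both intervals contain zero, but the fixed-TC interval is roughly three times wider and centred further from zero than the dynamic one, which is worth keeping in mind when judging the scale of the error.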

Even though some points differ (mainly due to the presence of TDMA in OMNeT++), both CIs include zero, so we can say that, in both cases, the two simulations agree well enough for the study. These are real results from our first simulation; we later improved the agreement by adding TDMA behavior to the Matlab simulation, reducing the differences seen in the initial experiments.

_________________________________________________________________________________

If the simulation is considered valid, it becomes our new reference simulation for the study cases, since we are going to compare how changes in parameters or algorithms affect the task results. The next step is to set up the experimental design for the study, which is covered in our next topic.

References

[1] Klügl, F. et al. "A validation methodology for agent-based simulations", in Proceedings of the 2008 ACM Symposium on Applied Computing (SAC), DOI: 10.1145/1363686.1363696, 2008.

[2] Balci, O. "Introduction to Modeling and Simulation". Class Slides, ACM SIGSIM, available at <http://www.acm-sigsim-mskr.org/Courseware/Balci/introToMS.htm>, 2013.

[3] Sokolowski, J. A. and Banks, C. M. "Modeling and Simulation Fundamentals: Theoretical Underpinnings and Practical Domains", John Wiley & Sons, ISBN 978-0-470-48674-0, 2010.

[4] Sargent, R. G. "Verification and validation of simulation models", in Journal of Simulation, 7, 12-24, 2013.

[5] Law, A. M. "Simulation Modeling and Analysis", McGraw-Hill, 5th Edition, pp. 804, 2015.

[6] Robinson, S. "Simulation: The Practice of Model Development and Use", 2nd Edition, ISBN 9781137328021, Palgrave Macmillan, 2014.

[7] Siegfried, R. "Modeling and Simulation of Complex Systems", Springer Vieweg, ISBN 978-3-658-07528-6, 2014.

Last Update: 11/08/17